Project Summary

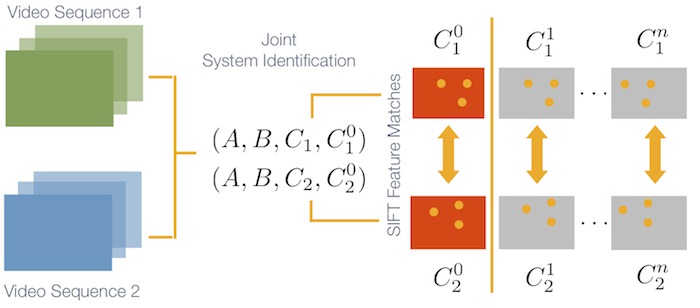

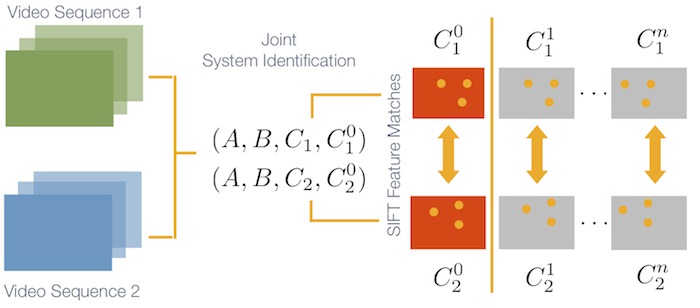

We address the problem of spatially and temporally registering multiple video sequences of a non-rigid dynamical scene, for example, multiple videos of a fountain taken from different vantage points. Our approach is not based on frame-by-frame or volume-by-volume registration. Instead, we use the dynamic texture framework, which models the non-rigidity of the scene with linear dynamical systems that encode both the dynamics and the appearance of the scene. Our key contribution is the observation that a certain appearance matrix extracted from the dynamic texture model is invariant with respect to rigid motions of the camera, and thus can be used directly to register the video sequences. Our framework applies both to synchronized videos and to videos with a temporal lag between them. The result is a simple and flexible method that achieves state-of-the-art performance with a significant reduction in computational complexity.
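To make the idea concrete, here is a toy sketch in Python/NumPy. The function `learn_dynamic_texture` follows the standard SVD-based identification of a dynamic texture, y_t ≈ C0 + C z_t and z_{t+1} ≈ A z_t, with C playing the role of the appearance matrix; whether this matches the exact identification and canonical-form steps used in the papers listed on this page is an assumption. Camera motion is simplified to a circular 1-D pixel shift, and the hypothetical helper `estimate_shift` registers the two videos by aligning the column spaces of their appearance matrices instead of matching frames. The second video also starts 30 frames later, to mimic a temporal lag. This is a simplified stand-in, not the authors' registration method.

```python
import numpy as np


def learn_dynamic_texture(Y, n):
    """SVD-based identification of a dynamic texture (a linear dynamical system).

    Y : (p, F) array whose column t is frame y_t flattened into p pixels.
    n : order (dimension) of the hidden state.
    Returns C0 (p, 1), A (n, n), C (p, n), Z (n, F) such that
        y_t ~ C0 + C z_t  and  z_{t+1} ~ A z_t.
    """
    C0 = Y.mean(axis=1, keepdims=True)               # mean frame
    U, s, Vt = np.linalg.svd(Y - C0, full_matrices=False)
    C = U[:, :n]                                     # appearance matrix (orthonormal columns)
    Z = s[:n, None] * Vt[:n, :]                      # hidden-state trajectory
    A = Z[:, 1:] @ np.linalg.pinv(Z[:, :-1])         # least-squares fit of the dynamics
    return C0, A, C, Z


def estimate_shift(C1, C2):
    """Hypothetical helper: circular pixel shift d that best maps span(C1) onto span(C2).

    score[d] = ||roll(C1, d)^T C2||_F^2, evaluated for every d at once with FFTs.
    It depends only on the column spaces, so the sign/basis ambiguity of the
    identified appearance matrix (C versus C R for orthogonal R) does not matter.
    """
    F1 = np.fft.fft(C1, axis=0)
    F2 = np.fft.fft(C2, axis=0)
    # corr[d, j, k] = sum_i C1[i, j] * C2[(i + d) % p, k]
    corr = np.fft.ifft(np.conj(F1)[:, :, None] * F2[:, None, :], axis=0).real
    score = (corr ** 2).sum(axis=(1, 2))
    return int(np.argmax(score))


def simulate(C0, C, A, z0, F, rng):
    """Toy data: y_t = C0 + C z_t with z_{t+1} = A z_t + process noise."""
    z, frames = z0, []
    for _ in range(F):
        frames.append(C0[:, 0] + C @ z)
        z = A @ z + 0.5 * rng.standard_normal(z.shape[0])
    return np.stack(frames, axis=1)


rng = np.random.default_rng(0)
p, n, true_shift, lag = 64, 4, 11, 30
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
A_true = 0.95 * Q                                    # stable dynamics (spectral radius 0.95)
Y = simulate(rng.standard_normal((p, 1)), rng.standard_normal((p, n)),
             A_true, rng.standard_normal(n), 260, rng)

Y1 = Y[:, :200]                                        # camera 1
Y2 = np.roll(Y[:, lag:lag + 200], true_shift, axis=0)  # camera 2: shifted view, 30 frames later
_, _, C1, _ = learn_dynamic_texture(Y1, n)
_, _, C2, _ = learn_dynamic_texture(Y2, n)
print(estimate_shift(C1, C2))                          # should print 11
```

Because the score compares subspaces, it needs only the n appearance images of each video rather than every frame, and it is unaffected by the basis ambiguity C → CR of the identified model. The time offset between the two videos also drops out in this toy, since both clips span the same appearance subspace.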

Publications

[1] A. Ravichandran and R. Vidal. Video Registration using Dynamic Textures. IEEE Transactions on Pattern Analysis and Machine Intelligence, January 2011.

[2] A. Ravichandran and R. Vidal. European Conference on Computer Vision, 2008.

[3] A. Ravichandran and R. Vidal. International Workshop on Dynamical Vision, October 2007.

Patents

System and Method For Registering Video Sequences. Patent Publication Number US2010-0260439-A1, October 14, 2010.

Acknowledgements

This work was partially supported by startup funds from JHU, by grants ONR N00014-05-1083, NSF CAREER 0447739, NSF EHS-0509101, and by contract JHU APL-934652.