Dynamic Textures

Research Problems

Textures such as grass fluttering in the wind or waves on the ocean possess a characteristic appearance that evolves as a function of time. This temporal evolution is governed by the dynamics of the object. Recent research has been able to model the appearance and its temporal evolution as a linear dynamical system (LDS). One of the biggest challenges when dealing with dynamic textures is that their temporal evolution is non-rigid. Hence, one cannot directly apply existing methods to traditional problems such as segmentation and registration. The scope of our research is to create novel algorithms for solving some of these well-known computer vision problems in the presence of dynamic textures.

More specifically, the problems we are trying to solve are:

- Registration of multiple dynamic textures

- Motion estimation from scenes that have camera motion over dynamic textures such as the surface of the ocean

- Dynamic texture categorization

- Subspace tracking using algebraic methods

- Segmentation of scenes containing multiple rigid objects and dynamic textures
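For reference, the linear dynamical system mentioned above is commonly written in the following state-space form. The symbols are assumptions made here for illustration and do not appear on this page: $y_t$ is the vectorized video frame at time $t$, $z_t$ is a low-dimensional hidden state, $A$ encodes the dynamics, $C$ encodes the appearance, and $v_t$, $w_t$ are noise terms.

```latex
\begin{aligned}
z_{t+1} &= A\,z_t + v_t, \\
y_t     &= C\,z_t + w_t .
\end{aligned}
```

In this notation, "dynamics" refers to $A$ and "appearance" refers to $C$ in the project descriptions that follow.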

Dynamic Texture Categorization

Existing work on dynamic textures has addressed the problem of categorizing these video sequences. However, all such algorithms assume that each class contains a single dynamic texture captured under the same imaging conditions, such as viewpoint, scale and illumination. Such a scenario is fairly constrained and not very useful for practical applications. In this project, we aim to develop novel methods for dynamic texture categorization that are invariant to changes in viewpoint, scale, etc. Towards this end, we have developed several methods for categorizing dynamic textures. Each method provides a different set of invariances and exploits different techniques for categorization. A comprehensive list of algorithms we have developed and their details can be found here >>.

Registration of Multiple Dynamic Textures

We deal with the problem of spatially and temporally registering multiple video sequences of a non-rigid dynamical scene, for example, multiple videos of a fountain taken from different vantage points. Our approach is not based on frame-by-frame or volume-by-volume registration.

Instead, we use the dynamic texture framework, which models the non-rigidity of the scene with linear dynamical systems encoding both the dynamics and the appearance of the scene. Our key contribution is to observe that a certain appearance matrix extracted from the dynamic texture model is invariant with respect to the non-rigid motions of the scene, so it can be used directly to register the video sequences. Our framework is applicable both to synchronized videos and to videos containing temporal lags. In the latter case, we also propose a method to synthesize novel sequences without the temporal lags. We then show how our model can be extended to the case where there is camera motion in the video sequences. The final result is a simple and flexible method that achieves state-of-the-art performance with a significant reduction in computational complexity. More results >>

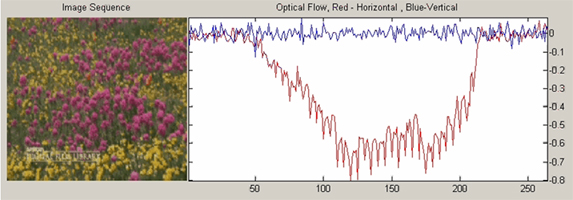

Motion Estimation

As outlined earlier, since the scenes are non-rigid, the classic brightness constancy constraint no longer holds for a moving dynamic texture. Hence, to deal with such image sequences, we model the scene as a time-varying dynamic texture. The reasoning is that the dynamics of the scene are constant irrespective of the viewpoint of the sequence. Based on this assumption, we propose a Dynamic Texture Constancy Constraint (DTCC). Here the time-varying appearance model accounts for the motion of the camera, while the dynamics of the scene remain constant.
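The contrast drawn above can be sketched in equations. The notation is assumed here for illustration and is not taken from this page: $I$ is image intensity, $(u, v)$ is the optical flow, $z_t$ is the hidden state, $A$ is the constant dynamics matrix, and $C_t$ is the time-varying appearance. This only writes down the stated modeling assumption; the exact form of the DTCC is given in the corresponding publication, not here.

```latex
% Classical brightness constancy, which fails for non-rigid scenes:
I(x + u,\; y + v,\; t + 1) = I(x, y, t)
\quad\Longrightarrow\quad
I_x\,u + I_y\,v + I_t \approx 0 .

% Time-varying dynamic texture: the appearance C_t changes with the camera,
% while the dynamics A stay fixed:
z_{t+1} = A\,z_t + v_t ,
\qquad
I(x, y, t) = C_t(x, y)\,z_t + w_t(x, y) .
```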

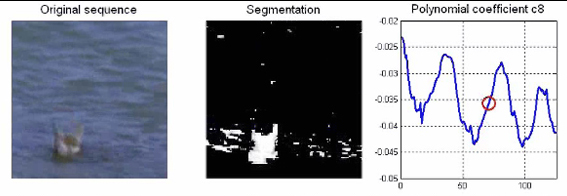

Clustering Moving Hyperplanes

We propose a recursive algorithm for clustering trajectories lying in multiple moving hyperplanes. Starting from a given or random initial condition, we use normalized gradient descent to update the coefficients of a time-varying polynomial whose degree is the number of hyperplanes and whose derivatives at a trajectory give an estimate of the vector normal to the hyperplane containing that trajectory. As time proceeds, the estimates of the hyperplane normals are shown to track their true values in a stable fashion. The segmentation of the trajectories is then obtained by clustering their associated normal vectors. The final result is a simple recursive algorithm for segmenting a variable number of moving hyperplanes. We test our algorithm on the segmentation of dynamic scenes containing rigid motions and dynamic textures, e.g., a bird floating on water.
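The recursion described above can be illustrated with a small, self-contained sketch. It is a toy example under strong assumptions, not the published algorithm: it is restricted to two lines through the origin in the plane, the data are synthetic, the gradient step is kept on the unit sphere by projection and renormalization, and the final clustering uses a simple farthest-point rule. All function names and constants (`veronese`, `gradient_step`, `RADII`, `OMEGA`, and so on) are hypothetical.

```python
import math

def veronese(x):
    """Degree-2 monomials of a 2-D point; a degree-2 polynomial is c . veronese(x)."""
    x1, x2 = x
    return [x1 * x1, x1 * x2, x2 * x2]

def unit(v):
    """Scale a vector to unit Euclidean norm."""
    n = math.sqrt(sum(a * a for a in v))
    return [a / n for a in v]

def gradient_step(c, points, step=1.0):
    """One gradient step on mean_j (c . veronese(x_j))^2, kept on the sphere ||c|| = 1."""
    g = [0.0, 0.0, 0.0]
    for x in points:
        nu = veronese(x)
        r = sum(ck * nk for ck, nk in zip(c, nu))          # polynomial value at x
        g = [gk + 2.0 * r * nk / len(points) for gk, nk in zip(g, nu)]
    radial = sum(gk * ck for gk, ck in zip(g, c))          # component along c
    return unit([ck - step * (gk - radial * ck) for ck, gk in zip(c, g)])

def normal_at(c, x):
    """Unit gradient of p(x) = c . veronese(x): estimated normal of the line through x."""
    x1, x2 = x
    return unit([2 * c[0] * x1 + c[1] * x2, c[1] * x1 + 2 * c[2] * x2])

def cluster_normals(normals):
    """Two-way clustering of normals up to sign, seeded with the two least aligned ones."""
    def adot(p, q):
        return abs(p[0] * q[0] + p[1] * q[1])
    a = normals[0]
    b = min(normals, key=lambda n: adot(n, a))
    return [0 if adot(n, a) >= adot(n, b) else 1 for n in normals]

# Synthetic data (assumed): two lines whose normals rotate slowly over time.
RADII = [0.6, 0.8, 1.0, -0.7, -0.9]    # signed position of each trajectory on its line
OMEGA = 0.002                           # rotation per time step, in radians

def true_normals(t):
    return [(math.cos(a + OMEGA * t), math.sin(a + OMEGA * t)) for a in (0.0, 1.2)]

def points_at(t):
    pts = []
    for bx, by in true_normals(t):
        pts += [(-r * by, r * bx) for r in RADII]          # points orthogonal to the normal
    return pts

c = [1.0, 0.0, 0.0]                     # arbitrary initial condition
for t in range(500):
    c = gradient_step(c, points_at(t))  # one recursive update per time step

estimated = [normal_at(c, x) for x in points_at(499)]
labels = cluster_normals(estimated)
print(labels)
```

With more hyperplanes or higher ambient dimension, the same idea would need a higher-degree embedding and a more general clustering step; neither is shown here.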

Segmentation

We have proposed algebraic methods and modified the level-set-based approach for segmenting dynamic textures. Since dynamic textures can be modeled as linear systems, in a scene containing multiple dynamic textures each dynamic texture lives in its own observability subspace. We can therefore use any subspace clustering method, such as GPCA, to segment these subspaces. But since subspace clustering algorithms do not take into account the spatial coherence in images, we proposed a variation of the standard GPCA, called Spatial GPCA. However, algebraic segmentation is sensitive to noise and does not impose any temporal coherence. To overcome this, we use the level set approach, which is known to work very well in the rigid-body case. Level set cost functions usually contain two terms, one for the data smoothness and the other for the contour length. To this cost function we add either a term for texture or a term that accounts for the fact that the image sequence must follow an ARX model.
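The observability-subspace argument above can be made explicit with a short identity. The notation is assumed here and does not appear on this page: texture $i$ has dynamics $A_i$ and appearance $C_i$, and noise is ignored. Stacking $k$ consecutive outputs of texture $i$ gives

```latex
\begin{bmatrix} y_t \\ y_{t+1} \\ \vdots \\ y_{t+k-1} \end{bmatrix}
=
\underbrace{\begin{bmatrix} C_i \\ C_i A_i \\ \vdots \\ C_i A_i^{\,k-1} \end{bmatrix}}_{\mathcal{O}_k(A_i,\,C_i)}
z_t ,
```

so the stacked outputs lie in the column space of the observability matrix $\mathcal{O}_k(A_i, C_i)$. Different textures generally span different subspaces, which is what a subspace clustering method can exploit.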

Publications

[1] Video Registration using Dynamic Textures. IEEE Transactions on Pattern Analysis and Machine Intelligence, January 2011.

[2] A Unified Approach to Segmentation and Categorization of Dynamic Textures. Asian Conference on Computer Vision, November 2010.

[3] IEEE International Conference on Computer Vision and Pattern Recognition, June 2009.

[4] European Conference on Computer Vision, October 2008.

[5] IEEE International Conference on Computer Vision, October 2007.

[7] Neural Information Processing Systems, December 2006.

[8] International Workshop on Dynamical Vision, May 2006.

[9] IEEE International Symposium on Biomedical Imaging, pages 634-637, April 2006.

[10] IEEE International Conference on Computer Vision and Pattern Recognition, volume 2, pages 516-521, June 2005.